I am pleased to report that the stream of data I have been collecting off the insertable device can be used to detect different user gestures. Gestures, you ask? Well, I want to be able to tell what the user is doing with the device (e.g. blow job, licking, hand job and penetrative buttsex). I'm not sure I can tell the difference between a vagina and a butt, but why not try?

The video below shows the device distinguishing between blow jobs and hand jobs.

The detection algorithm is taken from the excellent Kinect DTW implementation.

Dynamic Time Warping (or DTW) is a great little solution for matching time-series data where the data points might be stretched or squashed over time. Apart from detecting blow jobs, it has been used for speech recognition, among other things.
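For anyone who wants to see the idea in code, here is a minimal sketch of textbook DTW in Python. It is not the Kinect DTW implementation itself; the function name `dtw_distance` and the choice of Euclidean distance between sets are assumptions made for illustration.

```python
import math


def dtw_distance(seq_a, seq_b):
    """Textbook DTW cost between two sequences of equal-width vectors.

    Hypothetical helper: each element of seq_a / seq_b is one "set"
    (a list of numbers). Euclidean distance is an assumed local cost.
    """
    n, m = len(seq_a), len(seq_b)
    inf = float("inf")
    # cost[i][j] = cheapest alignment of the first i sets of seq_a
    # with the first j sets of seq_b.
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = math.dist(seq_a[i - 1], seq_b[j - 1])
            # Either sequence may "wait" (stretch) or both advance together.
            cost[i][j] = local + min(
                cost[i - 1][j],      # seq_b waits
                cost[i][j - 1],      # seq_a waits
                cost[i - 1][j - 1],  # both advance
            )
    return cost[n][m]
```

A sequence and a slowed-down copy of it come out with zero cost, which is exactly the "stretched or squashed" tolerance described above. A lower cost means a closer match.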

The device streams 8 data points at a time; let’s call each collection of 8 data points a set. I get a set every 100 ms. Once I have more than 8 sets of data, I attempt to match those 8 sets of 8 data points against a prerecorded “example” sequence of 32 sets held in memory, using the Dynamic Time Warping algorithm.
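The buffering and matching loop described above could be sketched roughly like this. Everything here is hypothetical scaffolding: the class name `GestureMatcher`, the `threshold` cut-off and the pluggable `distance` function (which would be a DTW cost in practice) are assumptions, not the code actually running against the device.

```python
from collections import deque

SET_SIZE = 8     # data points per set, as described in the post
WINDOW_SETS = 8  # assumed number of recent sets kept for matching


class GestureMatcher:
    """Keeps the most recent sets and compares them to stored examples."""

    def __init__(self, templates, distance, threshold):
        self.templates = templates  # dict: gesture name -> example sequence
        self.distance = distance    # callable(window, template) -> cost
        self.threshold = threshold  # assumed: costs above this are "no match"
        self.window = deque(maxlen=WINDOW_SETS)

    def push(self, data_set):
        """Add one set (arriving every 100 ms); return a gesture name or None."""
        if len(data_set) != SET_SIZE:
            raise ValueError("expected %d data points per set" % SET_SIZE)
        self.window.append(list(data_set))
        if len(self.window) < WINDOW_SETS:
            return None  # not enough data collected yet
        recent = list(self.window)
        best_name, best_cost = None, float("inf")
        for name, template in self.templates.items():
            cost = self.distance(recent, template)
            if cost < best_cost:
                best_name, best_cost = name, cost
        return best_name if best_cost <= self.threshold else None
```

Because the oldest set drops off the `deque` automatically, every new set triggers a fresh comparison against each stored example.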

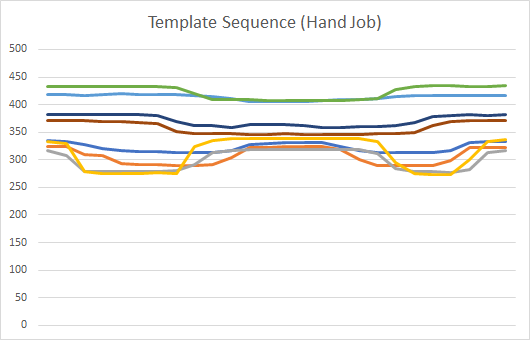

The image below is an example sequence of a “hand job” gesture:

Because lube and other environmental factors can change the baseline considerably, I “normalise” the data to a value from 0 to 1 for each independent stream (line) of data. This seems to be a pretty robust approach.

Normalising in this way is helpful, especially when you consider that the conductive silicone will also have a capacitance and, due to my imperfect construction process, I can’t guarantee it will be consistent in any way.
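As an illustration, per-stream min-max normalisation might look like the sketch below. The function name `normalise_channels` is made up, and two details are assumptions the post does not specify: the minimum and maximum are taken over whatever sequence is passed in, and a completely flat stream is mapped to 0.

```python
def normalise_channels(sets):
    """Scale each stream (column) of a sequence of sets to the range 0..1.

    Hypothetical helper: every stream uses its own min and max, so a
    shifted baseline on one stream does not affect the others.
    """
    if not sets:
        return []
    channels = list(zip(*sets))  # transpose: one tuple per stream
    scaled = []
    for channel in channels:
        lo, hi = min(channel), max(channel)
        span = hi - lo
        # Assumed behaviour: a flat stream carries no signal, so emit 0.0.
        scaled.append([(v - lo) / span if span else 0.0 for v in channel])
    return [list(row) for row in zip(*scaled)]  # transpose back to sets
```

Since each stream is scaled independently, a stream whose raw readings sit much higher than its neighbours still ends up on the same 0 to 1 scale before matching.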

A last step (which I haven’t yet tried but will shortly) is to apply a simple moving average to smooth the data out. This should result in more reliable matches.
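One way that smoothing step could look, for a single stream of values, is sketched below. This is an untested idea rather than something verified against the device: the name `moving_average`, the trailing window and the default width of 3 are all assumptions.

```python
def moving_average(values, width=3):
    """Trailing simple moving average over one stream of readings.

    Hypothetical helper: the first few outputs average only the
    samples seen so far, so the output has the same length as the input.
    """
    if width < 1:
        raise ValueError("width must be at least 1")
    smoothed = []
    running = 0.0
    for i, value in enumerate(values):
        running += value
        if i >= width:
            running -= values[i - width]  # drop the sample leaving the window
        smoothed.append(running / min(i + 1, width))
    return smoothed
```

A wider window smooths more but also delays the response, which matters when a new set only arrives every 100 ms.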

I have been able to get this same algorithm working in C# as well as in Ren'Py, a Python-based game engine I intend to use for a game that interacts with the device. Unfortunately, Ren'Py is a little too slow for the job and is not really designed for this kind of real-time processing anyway.

Next step: move the gesture recognition processing onto the Arduino itself for performance reasons. As it turns out, there’s not enough memory on an Arduino to implement a DTW algorithm for more than one gesture. Never mind, I learnt how to do threads in Ren'Py instead (renpy.invoke_in_thread).
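The threading idea can be sketched with Python's standard `threading` and `queue` modules so that it runs outside the engine. The names `recognition_worker` and `start_recognition` are hypothetical, as is the convention of sending `None` to stop the worker. Inside Ren'Py, the assumption is that `renpy.invoke_in_thread(recognition_worker, frames, results, classify)` would take the place of creating the `threading.Thread` by hand.

```python
import queue
import threading


def recognition_worker(frames, results, classify):
    """Run in the background: classify sets as they arrive.

    frames   -- queue of incoming sets; None is an assumed stop signal
    results  -- queue the game loop can poll without blocking
    classify -- hypothetical callable(set) -> gesture name or None
    """
    while True:
        data_set = frames.get()
        if data_set is None:
            break
        match = classify(data_set)
        if match is not None:
            results.put(match)


def start_recognition(classify):
    """Start the worker and return (frames, results, thread)."""
    frames = queue.Queue()
    results = queue.Queue()
    thread = threading.Thread(
        target=recognition_worker,
        args=(frames, results, classify),
        daemon=True,  # do not keep the process alive on exit
    )
    thread.start()
    return frames, results, thread
```

The game side would put each new set on `frames` and call `results.get_nowait()` once per frame, catching `queue.Empty`, so the slow matching never stalls the display.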
